Just finished the short course on pretraining LLMs from deeplearning.ai – link

What a good use of an hour on a Wednesday night. Otherwise, I would probably have been watching YouTube Shorts while drinking a beer 🙂

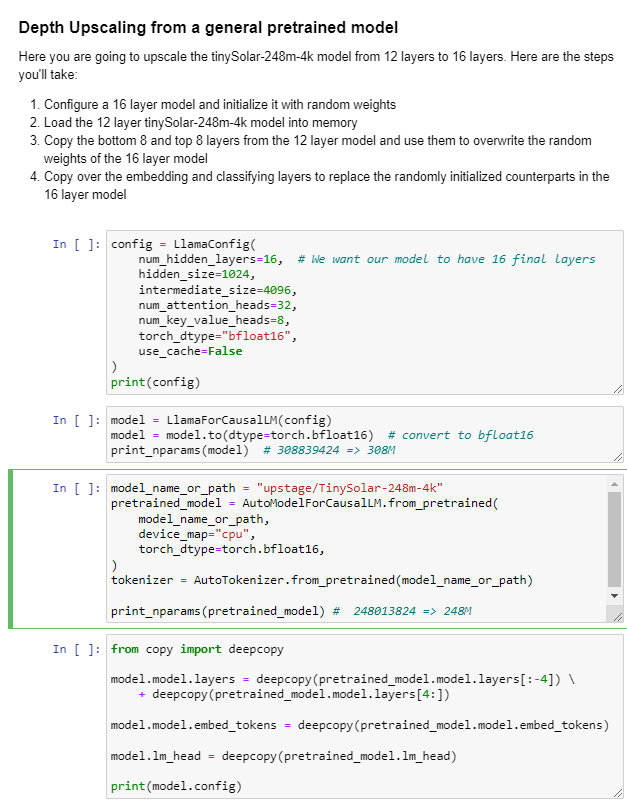

In this tutorial, the instructors Sung (CEO) and Lucy (CSO) from Upstage walk through the key steps of training an LLM using Hugging Face libraries. Most importantly, they introduce several techniques, such as depth upscaling and downsizing, that accelerate pretraining by reusing the weights of an existing model in a model with a different configuration.

The chapter I personally liked the most is Model Initialization. This is the step where you customize the configuration and initialize the weights.

For example, here they define a new model with 16 layers (~308M parameters) and initialize its weights by simply concatenating the first 8 layers of a 12-layer pretrained model with the last 8 layers of the same model. The resulting layer order is 1, 2, 3, 4, (5, 6, 7, 8), (5, 6, 7, 8), 9, 10, 11, 12, so the middle four layers appear twice. Somehow the generated text still has some linguistic coherence instead of being complete gibberish.
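
To make the layer arithmetic concrete, here is a minimal sketch in plain Python. It is not the course's code: `depth_upscale` and `n_keep` are hypothetical names, and each "layer" is just an integer standing in for a real transformer block.

```python
from copy import deepcopy


def depth_upscale(layers, n_keep):
    """Return the first n_keep layers followed by the last n_keep layers.

    Hypothetical helper for illustration only; `layers` can be any list.
    """
    if not 0 < n_keep <= len(layers):
        raise ValueError("n_keep must be between 1 and len(layers)")
    # Copy both halves so the duplicated middle layers are independent objects.
    return deepcopy(layers[:n_keep]) + deepcopy(layers[-n_keep:])


# Stand-in for the 12-layer pretrained model: each layer is its 1-based index.
pretrained_layers = list(range(1, 13))
upscaled_layers = depth_upscale(pretrained_layers, n_keep=8)

print(len(upscaled_layers))  # 16
print(upscaled_layers)       # [1, 2, 3, 4, 5, 6, 7, 8, 5, 6, 7, 8, 9, 10, 11, 12]
```

With a real Hugging Face Llama model, I would expect the same slicing to be applied to the list of decoder layers (`model.model.layers`), but treat that as an assumption rather than the exact code from the course.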

They claim that depth upscaling can cut the training cost by a whopping 70%.

Here is the paper published by Upstage AI.

    LlamaConfig {
      "_name_or_path": "./models/upstage/TinySolar-248m-4k",
      "architectures": [
        "LlamaForCausalLM"
      ],
      "attention_bias": false,
      "attention_dropout": 0.0,
      "bos_token_id": 1,
      "eos_token_id": 2,
      "hidden_act": "silu",
      "hidden_size": 1024,
      "initializer_range": 0.02,
      "intermediate_size": 4096,
      "max_position_embeddings": 32768,
      "model_type": "llama",
      "num_attention_heads": 32,
      "num_hidden_layers": 12,
      "num_key_value_heads": 8,
      "pretraining_tp": 1,
      "rms_norm_eps": 1e-06,
      "rope_scaling": null,
      "rope_theta": 10000.0,
      "tie_word_embeddings": false,
      "torch_dtype": "bfloat16",
      "transformers_version": "4.37.2",
      "use_cache": true,
      "vocab_size": 32000
    }
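
As a sanity check on the parameter counts, the numbers in this config are enough to estimate the model size by hand. The sketch below assumes the standard Llama layout: grouped-query attention, a three-matrix gated MLP, two RMSNorm weights per layer plus a final one, and no bias terms (consistent with `"attention_bias": false`). `llama_param_count` is a hypothetical helper, not a library function.

```python
def llama_param_count(hidden_size, intermediate_size, vocab_size,
                      num_attention_heads, num_key_value_heads,
                      num_hidden_layers, tie_word_embeddings=False):
    """Estimate trainable parameters of a Llama-style model (no bias terms)."""
    head_dim = hidden_size // num_attention_heads
    kv_dim = num_key_value_heads * head_dim

    # q_proj and o_proj are hidden x hidden; k_proj and v_proj are hidden x kv_dim.
    attention = 2 * hidden_size * hidden_size + 2 * hidden_size * kv_dim
    # gate_proj, up_proj and down_proj each hold hidden x intermediate weights.
    mlp = 3 * hidden_size * intermediate_size
    # Two RMSNorm weight vectors per decoder layer.
    norms = 2 * hidden_size
    per_layer = attention + mlp + norms

    embeddings = vocab_size * hidden_size
    lm_head = 0 if tie_word_embeddings else vocab_size * hidden_size
    final_norm = hidden_size
    return num_hidden_layers * per_layer + embeddings + lm_head + final_norm


# Values copied from the config above; only the layer count changes.
shared = dict(hidden_size=1024, intermediate_size=4096, vocab_size=32000,
              num_attention_heads=32, num_key_value_heads=8)

print(f"{llama_param_count(num_hidden_layers=12, **shared):,}")  # 248,013,824
print(f"{llama_param_count(num_hidden_layers=16, **shared):,}")  # 308,839,424
```

Under these assumptions, the 12-layer config comes out at about 248M parameters, matching the model name, and going to 16 layers gives the ~308M mentioned above.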