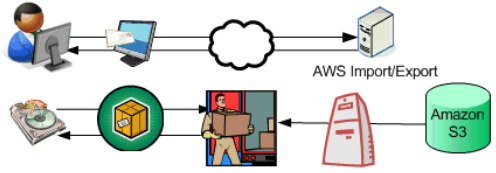

Would you believe AWS has an unbelievable service called “Import/Export”? Literally, it is a service that requires customers to “MAIL” a storage device (USB drive, disk…) to AWS. Yes, you heard me: no modern network cable involved.

There are plenty of interesting conversations comparing sending data over a network cable with other channels. Here is a fun post from BBC Technology:

> Ten USB key-laden pigeons were released from a Yorkshire farm at the same time a five-minute video upload was begun.
>
> An hour and a quarter later, the pigeons had reached their destination in Skegness 120km away, while only 24% of a 300MB file had uploaded.

Those pigeons were not alone, either; several similar experiments have been conducted elsewhere.

> Last year a similar experiment in Durban, South Africa saw Winston the pigeon take two hours to finish a 96km journey. In the same time just 4% of a 4GB file had downloaded.

Now, let's do a simple calculation.

Internet speed is usually determined by your ISP (Internet Service Provider) and your network hardware. The AWS SDK includes tools that can upload in multithreaded mode, which can increase the effective upload speed substantially.
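To illustrate why multithreading helps, here is a minimal sketch of the idea behind parallel (multipart) transfers: split a large file into byte ranges and send several ranges at the same time. It does not call any real AWS API. `upload_part` is a hypothetical stand-in that only reports how many bytes it would send, and the 8 MiB part size is an assumption chosen for illustration (S3 multipart parts must be at least 5 MiB, except the last one).

```python
from concurrent.futures import ThreadPoolExecutor

PART_SIZE = 8 * 1024 * 1024  # assumed part size for illustration: 8 MiB


def plan_parts(total_size, part_size=PART_SIZE):
    """Split total_size bytes into (part_number, offset, length) ranges."""
    parts = []
    offset = 0
    number = 1
    while offset < total_size:
        length = min(part_size, total_size - offset)
        parts.append((number, offset, length))
        offset += length
        number += 1
    return parts


def upload_part(part):
    """Hypothetical stand-in for a real network call; returns bytes 'sent'."""
    number, offset, length = part
    return length


def parallel_upload(total_size, workers=10):
    """Send all parts using a pool of threads; return (part count, bytes sent)."""
    parts = plan_parts(total_size)
    # Several threads work on different parts at once, so one slow
    # connection is no longer the only path for the data.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        sent = list(pool.map(upload_part, parts))
    return len(parts), sum(sent)


if __name__ == "__main__":
    n_parts, n_bytes = parallel_upload(100 * 1024 * 1024 + 123)
    print(n_parts, n_bytes)
```

A real SDK transfer tool does this splitting for you; the point of the sketch is only that throughput grows with the number of concurrent connections, roughly until your link itself is saturated.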

I tested downloading a 1.8 GB file from S3 to my local machine, and it took about 5 minutes. The average speed was about 7 MB/s. Let's assume a speed of 10 MB/s and a 10 TB dataset that you need to move from your datacenter to S3. Theoretically, it will take (10 TB × 1,000 GB/TB × 1,000 MB/GB) / (10 MB/s × 60 s/min × 60 min/h × 24 h/day) ≈ 11.6 days; in other words, about 12 days of nonstop transfer to send 10 TB of data to S3 using the AWS command line interface (CLI). Alternatively, copy your data directly onto portable drives, then go to UPS and ship the package to AWS. Based on a rough estimate from the AWS cost calculator, that costs about USD 200.
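The napkin math above can be written as two small Python functions. This is a sketch assuming decimal units (1 TB = 1,000 GB = 1,000,000 MB), matching the calculation in the text, and speeds in megabytes per second; the function names are my own.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def measured_speed_mb_per_s(size_gb, minutes):
    """Average speed (MB/s) of a transfer of size_gb gigabytes."""
    return size_gb * 1000 / (minutes * 60)


def transfer_days(size_tb, speed_mb_per_s):
    """Days of nonstop transfer for size_tb terabytes at a constant speed."""
    size_mb = size_tb * 1000 * 1000  # TB -> GB -> MB
    return size_mb / speed_mb_per_s / SECONDS_PER_DAY


if __name__ == "__main__":
    print(round(measured_speed_mb_per_s(1.8, 5), 1))  # the 1.8 GB test download
    print(round(transfer_days(10, 10), 1))            # 10 TB at 10 MB/s
```

A 1.8 GB download in exactly 5 minutes works out to 6.0 MB/s, in the same ballpark as the speed I observed, and 10 TB at 10 MB/s comes to about 11.6 days, which rounds to the 12 days above.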

12 days (traditional uploading) = USD 200 + 2 days (AWS Import/Export)

Not bad, not bad 🙂

Of course, your data size and internet speed may differ from mine for all kinds of reasons. Here is a quick rule of thumb for deciding whether AWS Import/Export is even worth considering.

The turnaround for AWS Import/Export is about 2 days (1 day to copy the data onto a USB drive and ship it, and another day for AWS to load the data).

Say your upload/download speed is S MB/s and the total size of your dataset is D TB.

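Transferring the dataset takes D × 1,000,000 / S seconds, so the break-even point, where uploading takes as long as the 2-day turnaround, is:

D × 1,000,000 / S = 2 × (24 × 60 × 60)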
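Solving the break-even equation for S gives the speed at which uploading and shipping take the same time. A minimal sketch, assuming the 2-day turnaround and decimal units used in this post; `break_even_speed` is a name introduced here for illustration.

```python
TURNAROUND_DAYS = 2  # assumed: 1 day to copy and ship, 1 day for AWS to load
SECONDS_PER_DAY = 24 * 60 * 60


def break_even_speed(size_tb, turnaround_days=TURNAROUND_DAYS):
    """Speed S (MB/s) at which uploading size_tb TB takes turnaround_days.

    From D * 1,000,000 / S = turnaround_days * 86,400, solve for S.
    """
    return size_tb * 1_000_000 / (turnaround_days * SECONDS_PER_DAY)


if __name__ == "__main__":
    for d in (1, 2, 10):
        print(f"{d} TB: {break_even_speed(d):.1f} MB/s")
```

If your sustained speed is below this number, shipping a drive should finish first; for 10 TB you would need roughly 58 MB/s of sustained throughput to beat a 2-day turnaround.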

To quickly estimate the total transfer time in days, divide the transfer time in seconds by the 86,400 seconds in a day:

D / S × (1,000,000 / (24 × 60 × 60)) ≈ 11.6 D/S ≈ 10 D/S

For example, say my internet speed is 7 MB/s and I need to load 2 TB of data.

I can quickly estimate 10D/S = 10 × 2 / 7 ≈ 3 days, which is long enough that I might want to think about AWS Import/Export. But if my boss tells me, “Dude, you need to load this 10 TB of data to S3 quickly,” a scratch on the back of a napkin says it will take 10 × 10 / 7 ≈ 14 days… so I will just ask for two hundred-dollar bills and walk straight to UPS.
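The rule of thumb and the two scenarios above fit in a few lines of Python. This is a sketch assuming the 2-day turnaround, speeds in MB/s and sizes in TB; the exact factor is 1,000,000 / 86,400 ≈ 11.6, and the napkin version rounds it down to 10. The helper names are hypothetical.

```python
TURNAROUND_DAYS = 2  # assumed Import/Export turnaround: ship 1 day + load 1 day


def days_exact(d_tb, s_mb_per_s):
    """Upload time in days: D * 1,000,000 / S seconds over 86,400 s/day."""
    return d_tb * 1_000_000 / s_mb_per_s / 86_400


def days_quick(d_tb, s_mb_per_s):
    """Napkin version of the same estimate: 10 * D / S."""
    return 10 * d_tb / s_mb_per_s


def consider_shipping(d_tb, s_mb_per_s):
    """True when uploading is estimated to take longer than the turnaround."""
    return days_exact(d_tb, s_mb_per_s) > TURNAROUND_DAYS


if __name__ == "__main__":
    for d_tb in (2, 10):  # the 2 TB and 10 TB scenarios, both at 7 MB/s
        print(d_tb, round(days_quick(d_tb, 7), 1),
              round(days_exact(d_tb, 7), 1), consider_shipping(d_tb, 7))
```

Because 10 is smaller than the exact 11.6, the quick estimate runs about 14% low: for the 10 TB case it gives about 14.3 days versus roughly 16.5 days exact. So the napkin math errs in favor of uploading, which makes a "ship it" verdict from the shortcut a safe one.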