I have been trying to figure out a way to do real-time transcription and managed to get OpenAI Whisper up and running. This post is a record of what I did and some of the things I learned along the way.

Download Content

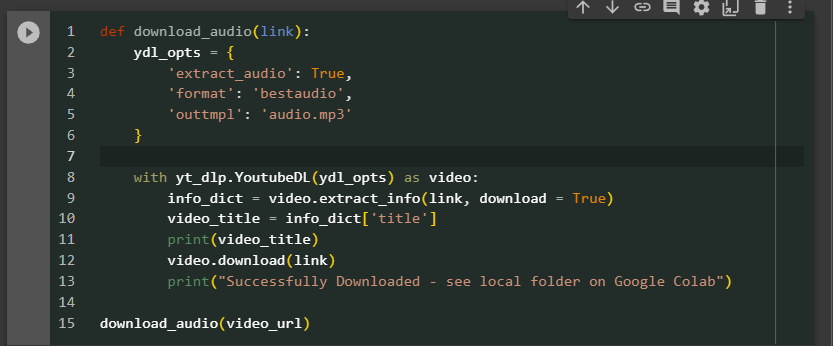

The first thing you need is content, and the quickest way to get it is of course to download it from YouTube. There are many online tools that can save you a few keystrokes. However, if you work with YouTube videos often, figuring out a programmatic way saves more time in the long run. I found two libraries that worked well for me: youtube-dl and yt_dlp.

Both are very convenient to use.

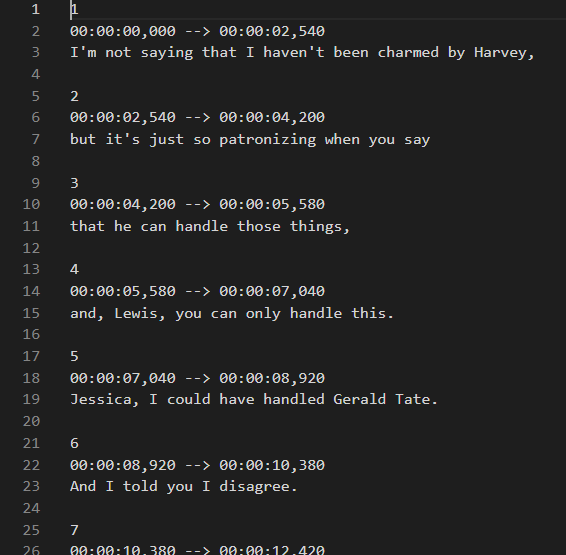

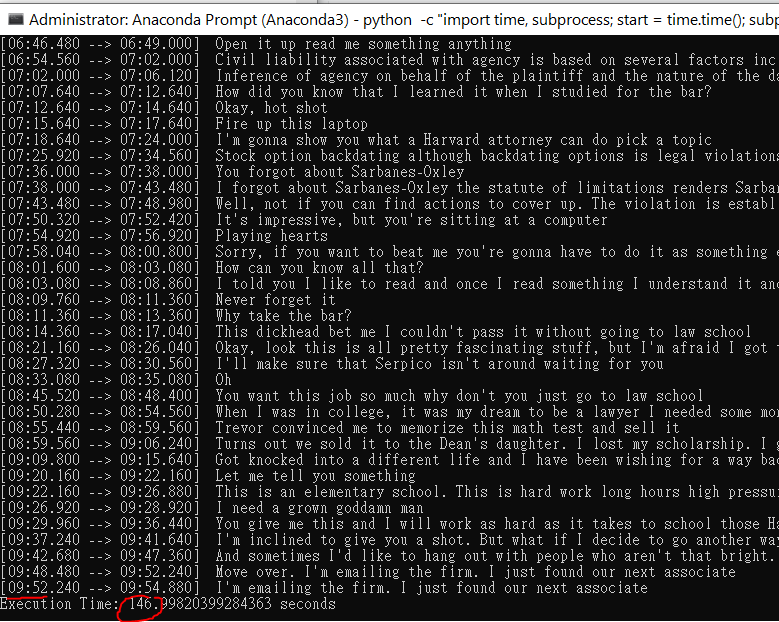
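In case the screenshots above are hard to read, here is a minimal sketch of an audio-only download with yt_dlp. It is not necessarily the exact code from the screenshots. The helper names, the output file name, and the URL in the usage comment are placeholders of my own, and the mp3 conversion step assumes ffmpeg is installed and on the PATH.

```python
def build_audio_options(output_stem: str = "audio") -> dict:
    """Build yt_dlp options for an audio-only download converted to mp3.

    `output_stem` is a hypothetical file name without extension.
    """
    return {
        # Prefer the best audio-only stream, fall back to the best overall.
        "format": "bestaudio/best",
        # yt_dlp fills in %(ext)s with the actual file extension.
        "outtmpl": output_stem + ".%(ext)s",
        # Convert the downloaded stream to mp3 (requires ffmpeg).
        "postprocessors": [
            {
                "key": "FFmpegExtractAudio",
                "preferredcodec": "mp3",
                "preferredquality": "192",
            }
        ],
    }


def download_audio(url: str, output_stem: str = "audio") -> None:
    """Download the audio track of `url` using the options above."""
    import yt_dlp  # imported here so the options helper works without it

    with yt_dlp.YoutubeDL(build_audio_options(output_stem)) as ydl:
        ydl.download([url])


# Usage (placeholder URL, replace with a real video):
# download_audio("https://www.youtube.com/watch?v=VIDEO_ID", "suits_scene1")
```

Keeping the options in their own function makes it easy to tweak the format or codec without touching the download call.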

Now you have 10 minutes of good-quality YouTube audio. yt_dlp also supports downloading video, with various options. (The screenshot is from a 10-minute video, the first scene of the TV show Suits.) The show is in fast-paced American English and often contains unfamiliar terms, because its plot revolves around lawyers.

Install and Set up Whisper

This step took me a bit longer than expected.

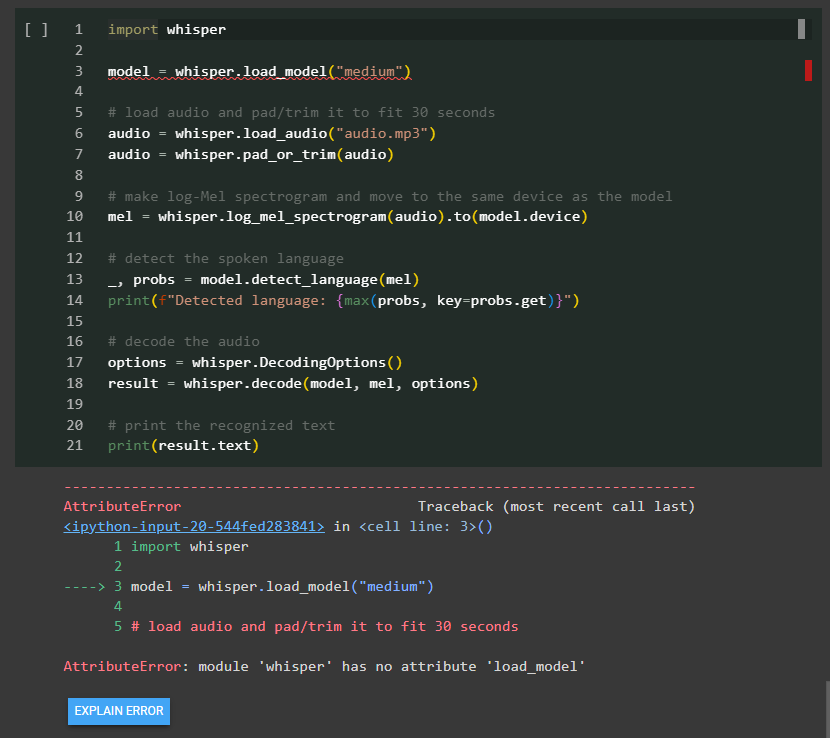

download the right whisper

I tried to run the tutorial from the Whisper documentation but couldn’t get the very first step, calling the load_model method, to work. It turned out that the package named whisper on PyPI is not the same as the latest code in OpenAI's GitHub repository. The most reliable fix is to point pip at the official GitHub repository, e.g. pip install git+https://github.com/openai/whisper.git, and you should be good to go. Otherwise, you will see the same error message I got below.

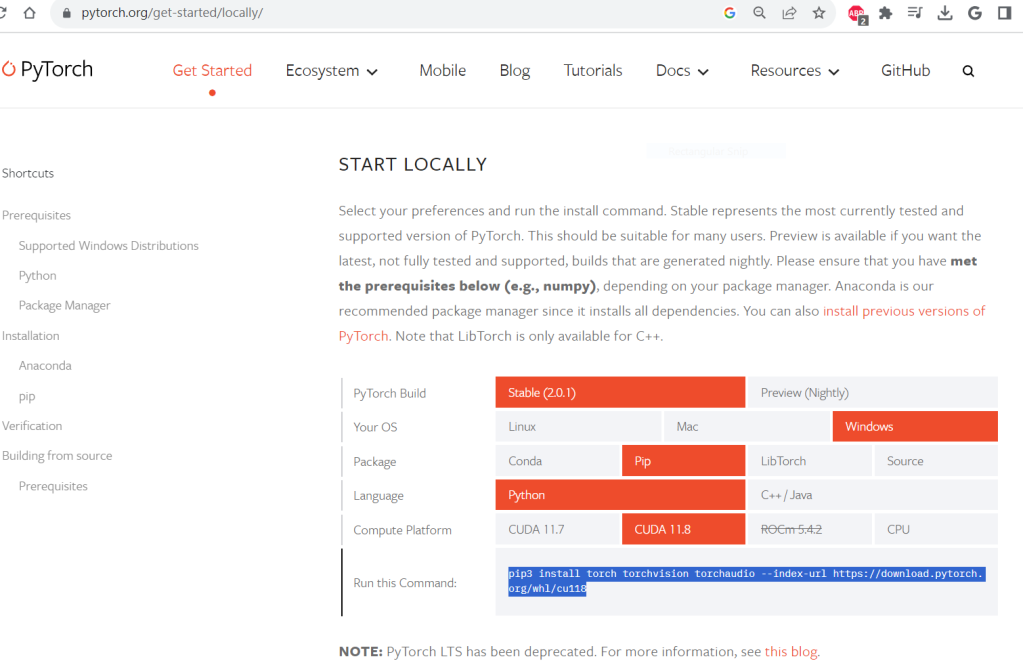

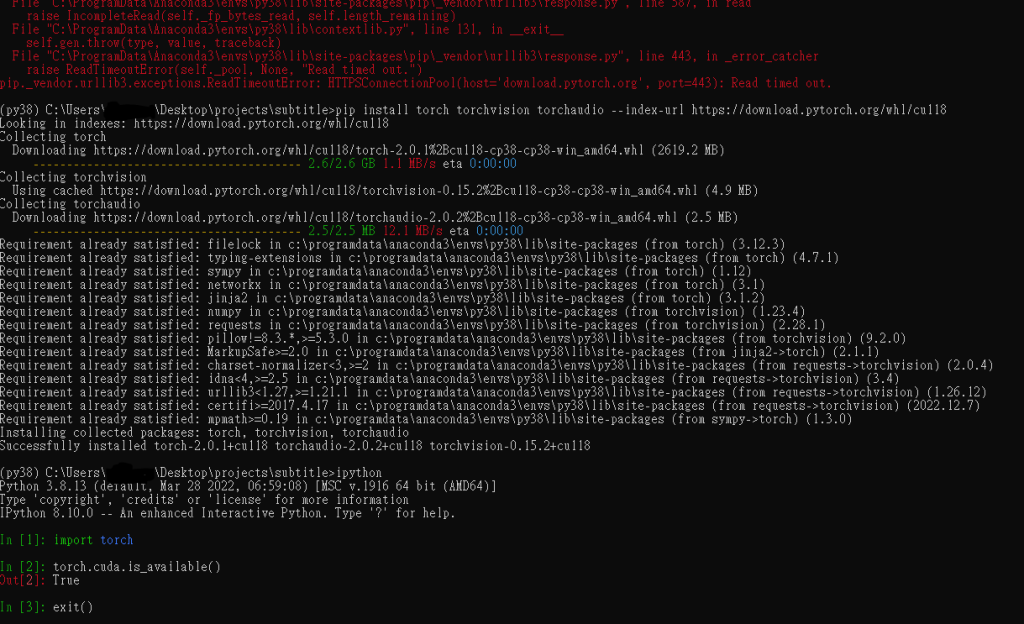
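A quick way to tell whether the right package ended up in your environment is to check that the imported module actually exposes load_model. The sketch below is my own illustration; the function names are hypothetical, and the only thing it relies on is that OpenAI's whisper module has a callable load_model attribute.

```python
import importlib


def has_load_model(module) -> bool:
    """Return True if `module` exposes a callable `load_model` attribute."""
    return callable(getattr(module, "load_model", None))


def check_whisper_install() -> str:
    """Import `whisper` and report whether it looks like OpenAI's package."""
    try:
        module = importlib.import_module("whisper")
    except ImportError:
        return "whisper is not installed"
    if has_load_model(module):
        return "whisper.load_model is available"
    return "a package named whisper is installed, but it has no load_model"


# Usage:
# print(check_whisper_install())
```

If the last message shows up, uninstalling the package and reinstalling from the GitHub URL above is the route that worked for me.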

set up torch gpu correctly

Whisper uses PyTorch behind the scenes and gives you the option to run on either the CPU or the GPU. To use the GPU, you have to make sure PyTorch is properly installed with GPU support enabled. Long story short, you should really follow the documentation on PyTorch’s website. Otherwise, you can easily end up with a CPU-only build, an outdated version, a build for the wrong CUDA version, or simply the wrong package (it is pip install torch, NOT pytorch).

In my case, I had to uninstall torch altogether first and then follow the instructions on the PyTorch site to reinstall it. The installation is a bit time-consuming because it downloads a 2.6 GB wheel for Windows. My first attempt even failed halfway through, but the second attempt succeeded. In hindsight, I could have relaxed the timeout to avoid errors caused by the slow download. Once everything is installed, you can run the code below to confirm that the GPU works with PyTorch.

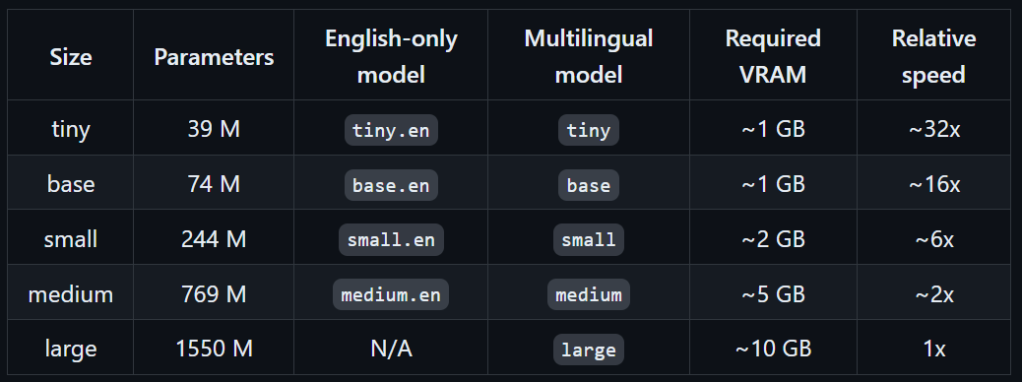
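For reference, a GPU check along these lines can be sketched as follows. It may differ from the code in the screenshot. The helper names are my own; the torch calls used are torch.cuda.is_available, torch.cuda.get_device_name, and torch.version.cuda (which is None on a CPU-only build).

```python
def pick_device(cuda_available: bool) -> str:
    """Map the CUDA availability flag to a device string."""
    return "cuda" if cuda_available else "cpu"


def report_torch_gpu() -> str:
    """Summarize the installed torch build and whether it can see a GPU."""
    import torch  # imported here so pick_device works without torch

    available = torch.cuda.is_available()
    lines = [
        f"torch version: {torch.__version__}",
        # None here means a CPU-only build was installed.
        f"built with CUDA: {torch.version.cuda}",
        f"device to use: {pick_device(available)}",
    ]
    if available:
        lines.append(f"GPU name: {torch.cuda.get_device_name(0)}")
    return "\n".join(lines)


# Usage:
# print(report_torch_gpu())
```

If "built with CUDA" prints None, the CPU-only wheel was installed and a reinstall from the PyTorch site's instructions is needed.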

get the model

Whisper comes in a few different model sizes. The bigger models transcribe more accurately, but at the cost of a larger footprint (storage, RAM, network) and slower speed. Keep in mind that a model is downloaded the first time it is used, so expect a delay that depends on your network.

transcribe

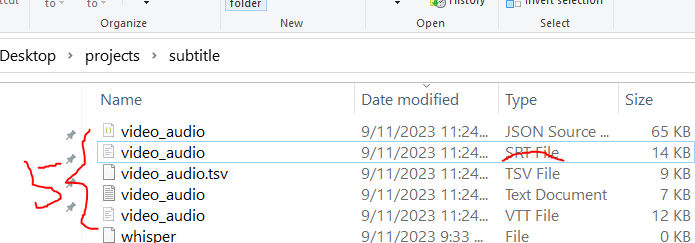

Transcribing is as easy as running “whisper audio.mp3”. In the end, you get 5 output files.

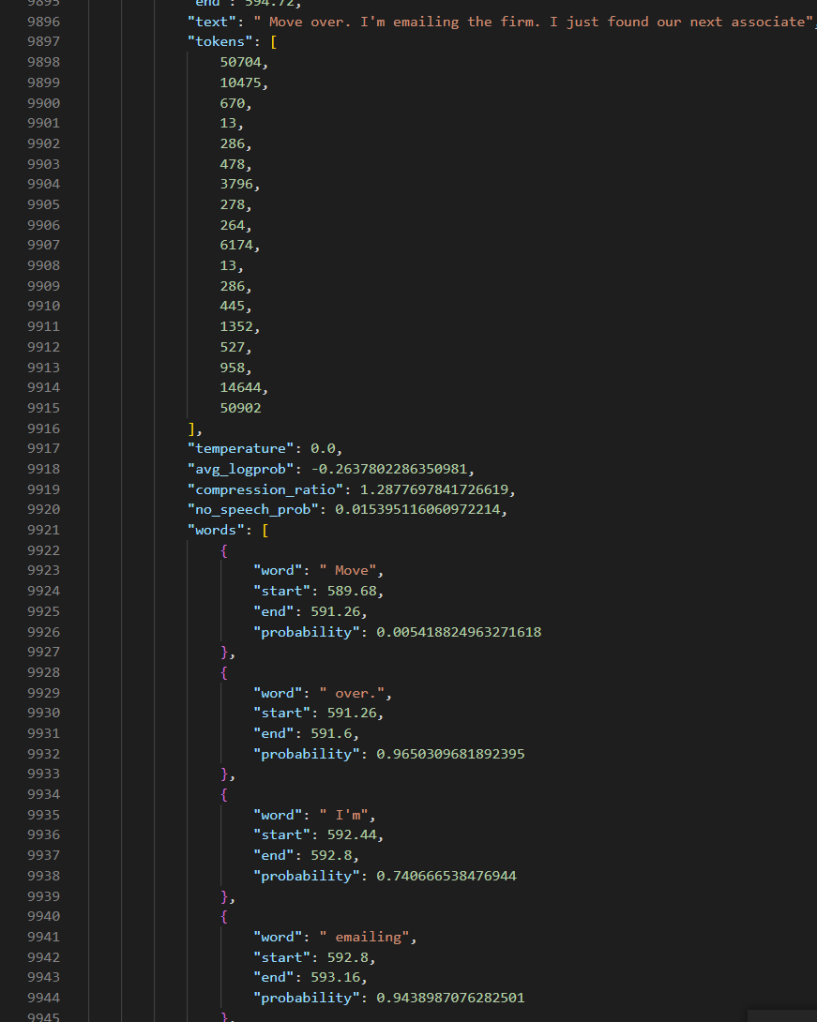

By enabling word-level timestamps with “--word_timestamps True”, you can even get a prediction for each individual word.
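The same thing can be done from Python instead of the command line. The sketch below assumes the Python API mirrors the CLI flag as a word_timestamps=True keyword on model.transcribe, and that each segment in the result carries a "words" list with "word", "start", and "end" keys. The helper names and the default model choice are my own.

```python
def flatten_words(result: dict) -> list:
    """Collect (word, start, end) tuples from a Whisper result dict."""
    words = []
    for segment in result.get("segments", []):
        # "words" is only present when word timestamps were requested.
        for word in segment.get("words", []):
            words.append((word["word"].strip(), word["start"], word["end"]))
    return words


def transcribe_with_words(audio_path: str, model_name: str = "base") -> list:
    """Transcribe `audio_path` and return word-level timings."""
    import whisper  # imported here so flatten_words works without whisper

    # The model is downloaded on first use, so the first call can be slow.
    model = whisper.load_model(model_name)
    result = model.transcribe(audio_path, word_timestamps=True)
    return flatten_words(result)


# Usage:
# for word, start, end in transcribe_with_words("audio.mp3"):
#     print(f"{start:7.2f} {end:7.2f} {word}")
```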

computing performance

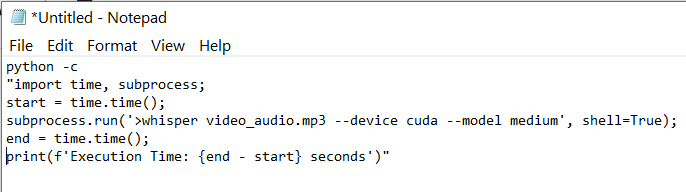

To measure the performance, I had to write a small Python script, since Windows lacks a timer like the time command on Linux.

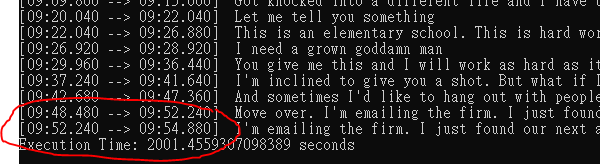
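A timer script of this kind can be sketched as below; it is an illustration rather than the exact script from the screenshot. It runs whatever command you pass on the command line and prints the wall-clock time, similar in spirit to time on Linux. The function name and the file name timer.py in the usage note are my own.

```python
import subprocess
import sys
import time


def time_command(command: list) -> float:
    """Run `command` and return the elapsed wall-clock time in seconds."""
    start = time.perf_counter()
    # check=True raises if the command exits with a non-zero status.
    subprocess.run(command, check=True)
    return time.perf_counter() - start


if __name__ == "__main__" and len(sys.argv) > 1:
    # Everything after the script name is treated as the command to time.
    elapsed = time_command(sys.argv[1:])
    print(f"elapsed: {elapsed:.1f} s")
```

Saved as timer.py, it could be invoked as: python timer.py whisper audio.mp3 --model medium --device cuda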

On the GPU, it took 146 seconds to transcribe the 9:54 (594 seconds) clip, which is a throughput of about 4.1x real time (594 / 146). The bigger this ratio, the faster the transcription. On the CPU, it took 2001 seconds, which is only about 0.3x. In other words, the GPU was roughly 14 times faster than the CPU (2001 / 146).

| description | time (s) | speed (time / 594 s): seconds needed to transcribe 1 s of audio | throughput (594 s / time): seconds of audio transcribed in 1 s |
| --- | --- | --- | --- |
| medium gpu | 146 | 0.25 | 4x |
| medium cpu | 2001 | 3.37 | 0.3x |
| tiny gpu | 48/50 | 0.08 | 12x |
| base gpu | 40/40 | 0.07 | 15x |
| large gpu | 783/800 | 1.31 | 0.75x |
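The two derived columns are simple ratios against the clip length of 594 seconds. As a small sketch (function and constant names are my own), they can be recomputed like this:

```python
# Length of the test clip: 9 minutes 54 seconds.
AUDIO_SECONDS = 9 * 60 + 54


def seconds_per_audio_second(elapsed: float, audio: float = AUDIO_SECONDS) -> float:
    """Processing time needed for one second of audio (lower is faster)."""
    return elapsed / audio


def throughput(elapsed: float, audio: float = AUDIO_SECONDS) -> float:
    """Seconds of audio transcribed per second of processing (higher is faster)."""
    return audio / elapsed


for label, elapsed in [("medium gpu", 146), ("medium cpu", 2001)]:
    print(
        f"{label}: speed {seconds_per_audio_second(elapsed):.2f}, "
        f"throughput {throughput(elapsed):.2f}x"
    )
```

A throughput above 1x means the model transcribes faster than the audio plays, which is the minimum requirement for anything real-time.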

Summary

Overall, working with Whisper was quite pleasant. The setup is manageable, and both the computing performance and the transcription quality are impressive. This makes it a useful tool for many applications and, if designed properly, the base model is fast enough to be used for real-time transcription.