An agent is a key component in LangChain: it interacts with an LLM and executes tasks by leveraging tools. This article documents my experience following this tutorial.

There are many types of agents in LangChain, e.g. conversational, OpenAI functions, zero-shot ReAct, etc. In this example, we will use the OpenAI functions agent. At a high level, OpenAI function calling is an extension to the basic GPT LLM: you describe a self-defined function to OpenAI in natural language, so that the model understands what it does and when to use it. The model then parses the given question and decides whether answering it requires invoking the user-defined function. If so, it returns the function name and the parsed arguments, and the calling code (here, the agent) executes the function with them. This is a useful offering because there are many scenarios where it is best to use a specific tool to get a deterministic result and avoid the hallucination that comes with a language model.
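The round trip described above can be sketched in plain Python without calling the API. This is a minimal illustration, not the tutorial's code: the argument names `word` and `letter`, the schema text, and the hand-written `assistant_message` are all assumptions that stand in for what the model would receive and return.

```python
import json

# Hypothetical two-argument function that the model is allowed to request.
def get_letter_count(word: str, letter: str) -> int:
    """Return how many times `letter` occurs in `word`."""
    return word.count(letter)

# Description sent to the model alongside the chat messages (JSON Schema style).
function_schema = {
    "name": "get_letter_count",
    "description": "Count how many times a letter occurs in a word.",
    "parameters": {
        "type": "object",
        "properties": {
            "word": {"type": "string", "description": "The word to search in"},
            "letter": {"type": "string", "description": "The letter to count"},
        },
        "required": ["word", "letter"],
    },
}

# Hand-written stand-in for an assistant reply that requests a function call.
# The arguments arrive as a JSON-encoded string, not as a dict.
assistant_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "get_letter_count",
        "arguments": '{"word": "strawberry", "letter": "r"}',
    },
}

available_functions = {"get_letter_count": get_letter_count}

def run_function_call(message: dict) -> dict:
    """Execute the requested function locally and wrap the result as a message."""
    call = message["function_call"]
    func = available_functions[call["name"]]
    kwargs = json.loads(call["arguments"])
    result = func(**kwargs)
    return {"role": "function", "name": call["name"], "content": str(result)}

function_message = run_function_call(assistant_message)
print(function_message)
```

The point to notice is that the model only names the function and supplies the arguments; the counting itself happens in ordinary Python, which is where the determinism comes from.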

Tutorial

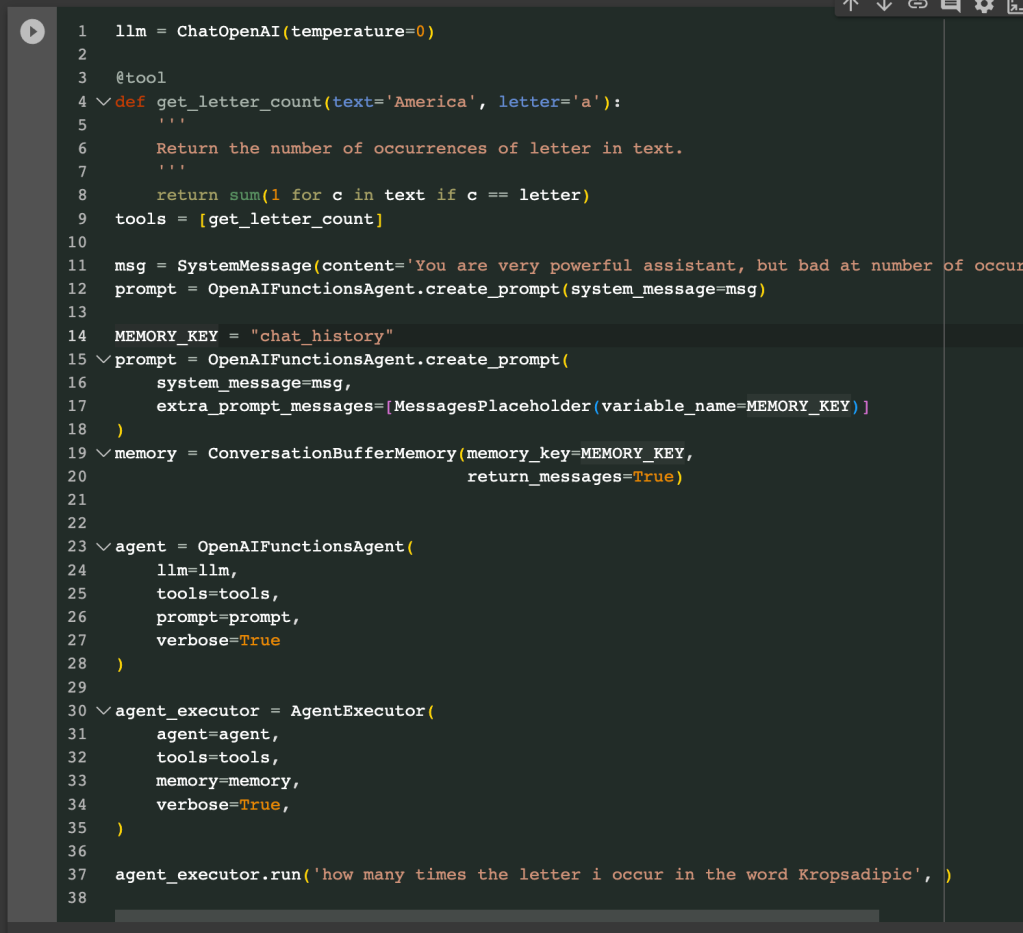

Instead of using the simple count-letter example included in the tutorial, the first change I made was to test a function with two arguments. I was interested in seeing whether OpenAI function calling would be able to extract both arguments and assign them correctly.

The tool decorator registers your function as a tool so that OpenAI can recognize it. The docstring is also required: it tells OpenAI what the function is used for and when to invoke it.

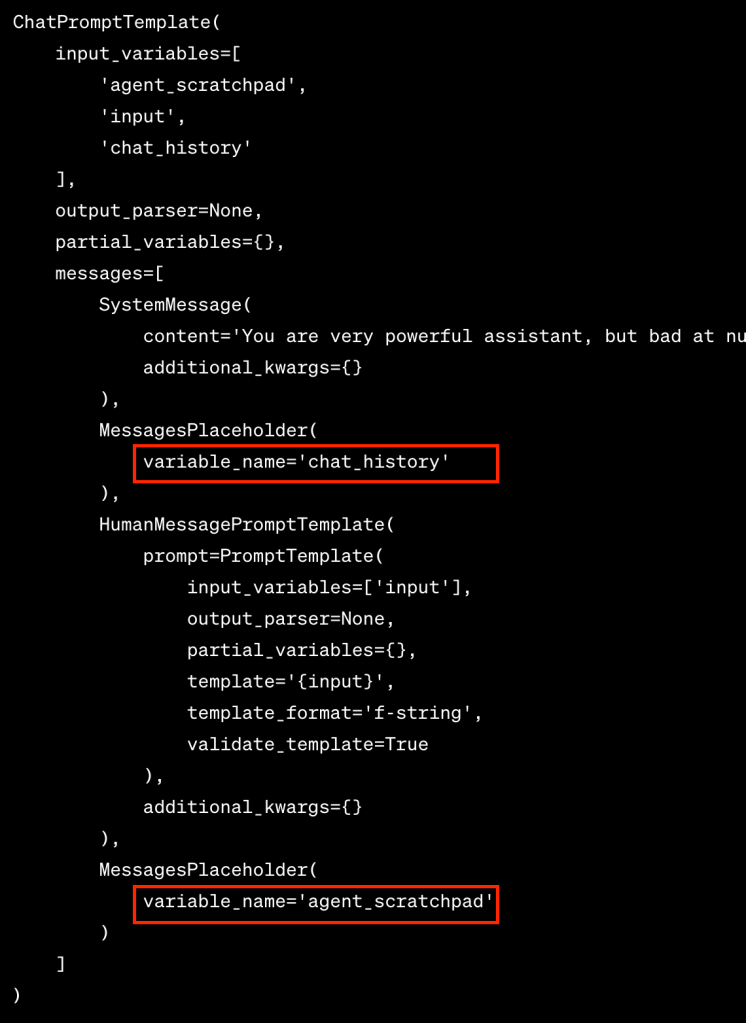
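To make the role of the docstring concrete, here is a simplified stand-in for what a tool decorator does. It is not LangChain's implementation: `simple_tool` and the `TOOLS` registry are hypothetical names, and the argument names `word` and `letter` are assumed.

```python
import inspect

TOOLS = {}  # hypothetical registry: tool name -> description, argument names, callable

def simple_tool(func):
    """Register `func`, using its docstring as the description shown to the model."""
    description = inspect.getdoc(func)
    if not description:
        # Without a description the model has no way to know when to call the tool.
        raise ValueError(f"{func.__name__} needs a docstring to be used as a tool")
    TOOLS[func.__name__] = {
        "description": description,
        "args": list(inspect.signature(func).parameters),
        "func": func,
    }
    return func

@simple_tool
def get_letter_count(word: str, letter: str) -> int:
    """Returns the number of times a letter occurs in a word."""
    return word.count(letter)

print(TOOLS["get_letter_count"]["description"])
print(TOOLS["get_letter_count"]["args"])
```

The description and the argument names are exactly the information the model needs in order to decide when to call the function and how to fill in both parameters.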

The agent creation requires the LLM, tools and a specific OpenAIFunctionsAgent prompt.

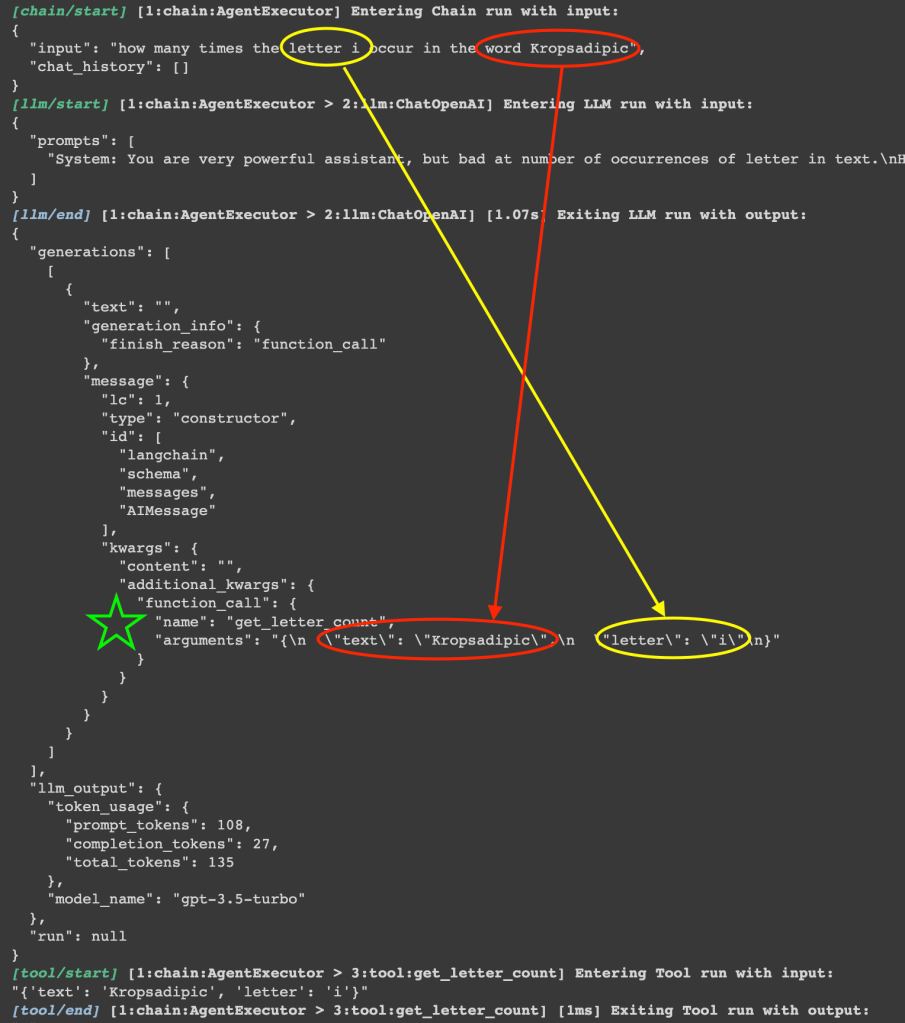
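How the three ingredients (LLM, tools, prompt) fit together can be sketched as a small loop. This is a toy illustration under stated assumptions, not LangChain's agent executor: `fake_llm` is a scripted stand-in for the chat model, and `run_agent` is a hypothetical helper name.

```python
import json

def get_letter_count(word: str, letter: str) -> int:
    """Returns the number of times a letter occurs in a word."""
    return word.count(letter)

TOOLS = {"get_letter_count": get_letter_count}

def fake_llm(messages):
    """Scripted stand-in for the chat model: request the tool once, then answer."""
    last = messages[-1]
    if last["role"] == "function":
        return {"role": "assistant", "content": f"The answer is {last['content']}."}
    return {
        "role": "assistant",
        "content": None,
        "function_call": {
            "name": "get_letter_count",
            "arguments": json.dumps({"word": "banana", "letter": "a"}),
        },
    }

def run_agent(question, llm, tools, system_message, max_steps=5):
    """Alternate between the model and the tools until the model answers in text."""
    messages = [
        {"role": "system", "content": system_message},
        {"role": "user", "content": question},
    ]
    for _ in range(max_steps):
        reply = llm(messages)
        messages.append(reply)
        call = reply.get("function_call")
        if call is None:
            return reply["content"]  # plain text reply: the agent is done
        result = tools[call["name"]](**json.loads(call["arguments"]))
        messages.append({"role": "function", "name": call["name"], "content": str(result)})
    raise RuntimeError("agent did not finish within max_steps")

answer = run_agent(
    "How many times does the letter a appear in banana?",
    fake_llm,
    TOOLS,
    "You are a helpful assistant, but you are bad at counting letters.",
)
print(answer)
```

The system message plays the part of the prompt, the dictionary of callables plays the part of the tools, and the loop is what turns a single function-calling model into an agent.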

You can turn on debug mode by setting langchain.debug = True. When debug mode is enabled, many messages are captured and printed to the screen.

Based on the debug messages, the question is parsed successfully: OpenAI understands my question, recognizes its own limitation in counting letters, decides to call the get_letter_count function, and extracts both arguments correctly (see screenshot above).

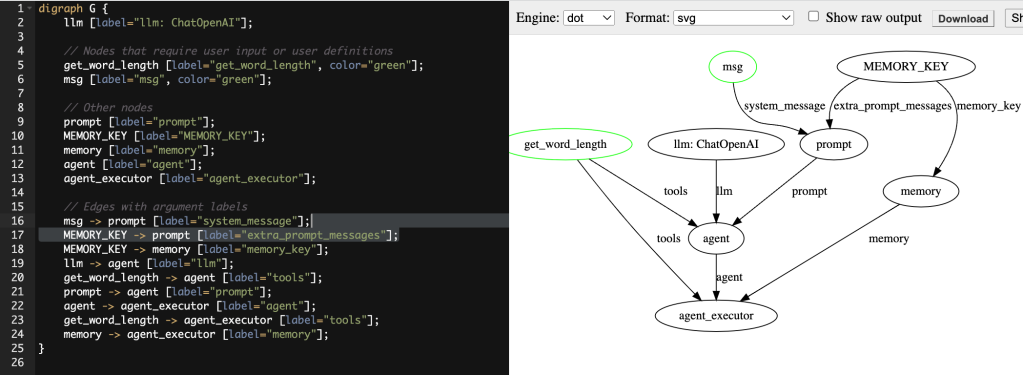

Code Dependencies (DAG of variables/functions)

There are indeed several steps in this simple example, but most of the code is boilerplate that you can simply copy and paste into your application. To show the inter-dependencies between all the components, here is a visualization in Graphviz DOT:

Based on this visualization, the only two places that require your customization are the original function that you want to teach OpenAI, and a basic system message that tells OpenAI under which scenario the function should be invoked.

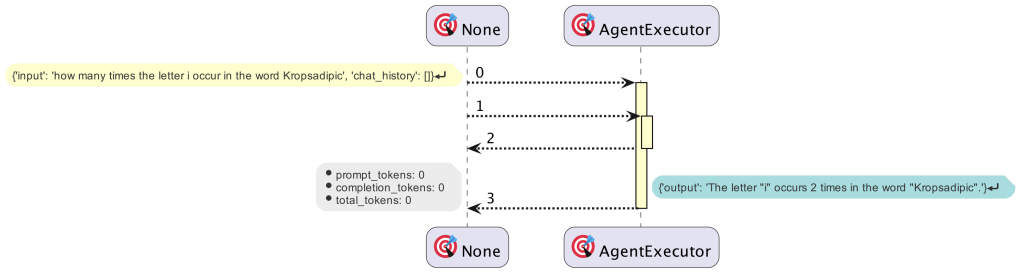

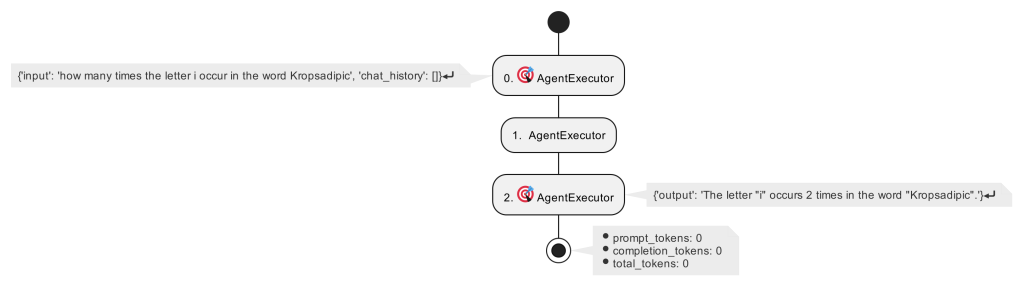
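A dependency graph of this kind can be produced from a plain edge list. The sketch below is an assumption-laden reconstruction, not the source of the images above: the node names follow the variables typically used in the tutorial (`llm`, `tools`, `prompt`, `agent`, `agent_executor`), and `to_dot` is a hypothetical helper.

```python
# Each edge (A, B) reads "A is used to build B". Node names are assumed.
EDGES = [
    ("get_letter_count", "tools"),
    ("system_message", "prompt"),
    ("llm", "agent"),
    ("tools", "agent"),
    ("prompt", "agent"),
    ("agent", "agent_executor"),
    ("tools", "agent_executor"),
]

# The two pieces that need customization, highlighted in the output.
CUSTOM = {"get_letter_count", "system_message"}

def to_dot(edges, highlighted):
    """Render the edge list as Graphviz DOT source."""
    lines = ["digraph agent_dependencies {", "    rankdir=LR;"]
    for node in sorted(highlighted):
        lines.append(f'    "{node}" [style=filled, fillcolor=lightyellow];')
    for src, dst in edges:
        lines.append(f'    "{src}" -> "{dst}";')
    lines.append("}")
    return "\n".join(lines)

dot_source = to_dot(EDGES, CUSTOM)
print(dot_source)
```

Saving the printed text to a file such as deps.dot and running `dot -Tpng deps.dot -o deps.png` renders it as an image, provided Graphviz is installed.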

Visualizing using PlantUML

If you are interested in the execution sequence and activity, there is a library called langchain-plantuml that uses callbacks to capture all the events and later visualizes them as diagrams. On a side note, PlantUML is a

Activity

Sequence
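The idea of capturing events through callbacks and turning them into a diagram can be illustrated with a toy recorder. This is not the langchain-plantuml API: `SequenceRecorder` is a hypothetical class, and the events are hand-written to mirror the agent run described earlier.

```python
class SequenceRecorder:
    """Hypothetical event recorder that emits PlantUML sequence diagram text."""

    def __init__(self):
        self.events = []

    def record(self, sender, receiver, label):
        # A real callback handler would be invoked by the framework on each event.
        self.events.append((sender, receiver, label))

    def to_plantuml(self):
        lines = ["@startuml"]
        lines += [f"{sender} -> {receiver}: {label}" for sender, receiver, label in self.events]
        lines.append("@enduml")
        return "\n".join(lines)

recorder = SequenceRecorder()
recorder.record("User", "AgentExecutor", "question")
recorder.record("AgentExecutor", "LLM", "messages and function schema")
recorder.record("LLM", "AgentExecutor", "function call request")
recorder.record("AgentExecutor", "Tool", "get_letter_count(word, letter)")
recorder.record("Tool", "AgentExecutor", "count")
recorder.record("AgentExecutor", "LLM", "function result")
recorder.record("LLM", "AgentExecutor", "final answer")
recorder.record("AgentExecutor", "User", "answer")

uml = recorder.to_plantuml()
print(uml)
```

The printed text is valid PlantUML sequence syntax, so it can be pasted into any PlantUML renderer to get a diagram of who called whom and in what order.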