If you are still proud of having years of Hadoop MapReduce development on your resume, you are behind the times.

As you may know, in 2014 Apache Spark totally knocked out Hadoop MapReduce in the large-scale sort benchmark (commonly referred to as TeraSort).

From a technical perspective, both are cluster computing frameworks, but Spark is more memory intensive, so the amount of data it can comfortably process is lower than Hadoop's, which is bounded by disk rather than RAM. However, as hardware improves, commodity servers routinely ship with tens of GB of memory, and a server with hundreds of GB of RAM is no longer surprising. With a small cluster of 10 servers at 64 GB of RAM each, the theoretical capacity for Spark is 640 GB. Unless you work for a company that mainly analyzes user activity at a very fine level of granularity, any commonly used, structured, routine dataset (years of invoices, inventory history, pricing, and so on) can easily fit into memory. Put another way, a dataset with low billions of records can easily be processed by Apache Spark.

Nowadays, services like AWS and Google Cloud also make it really easy to pay for extra hardware as you go. If you have a really huge dataset that you want to take a look at, simply bring up a cluster with a customized amount of memory and computing power; this is also fairly cost effective.
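To make the capacity arithmetic above concrete, here is a rough back-of-the-envelope sketch in plain Python. The 100 bytes per row and the 50% usable fraction are my own illustrative assumptions, not measured values; the real in-memory footprint depends on your schema, serialization, and how much RAM the OS and Spark itself reserve.

```python
GB = 1024 ** 3  # bytes in one gigabyte (binary)


def cluster_memory_gb(num_servers, ram_per_server_gb):
    """Theoretical total RAM across the cluster, in GB."""
    return num_servers * ram_per_server_gb


def dataset_size_gb(num_rows, bytes_per_row):
    """Rough size of a dataset in GB, given an average row width."""
    return num_rows * bytes_per_row / GB


def fits_in_memory(num_rows, bytes_per_row, num_servers, ram_per_server_gb,
                   usable_fraction=0.5):
    """Check whether the dataset fits in the usable share of cluster RAM.

    usable_fraction is a hypothetical safety margin (assumption): not all
    RAM is available for data once the OS and framework take their share.
    """
    usable_gb = cluster_memory_gb(num_servers, ram_per_server_gb) * usable_fraction
    return dataset_size_gb(num_rows, bytes_per_row) <= usable_gb


# The 10-server, 64 GB-each cluster from the text.
print("Theoretical capacity (GB):", cluster_memory_gb(10, 64))

# Assumed example: 2 billion rows at roughly 100 bytes per row.
print("Estimated dataset size (GB): %.1f" % dataset_size_gb(2_000_000_000, 100))
print("Fits in memory:", fits_in_memory(2_000_000_000, 100, 10, 64))
```

Under these assumptions a dataset in the low billions of rows fits comfortably, while one ten times larger would not, which is where renting a bigger cluster for a while comes in.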

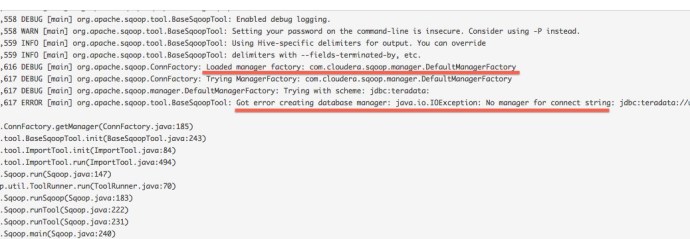

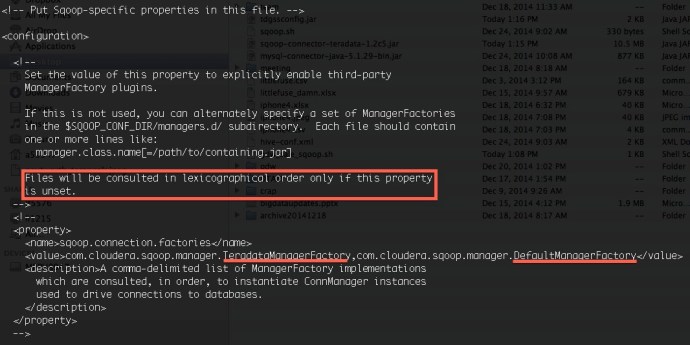

I have already spent a few hours on Spark and Spark SQL. It was a bit of a PITA at the beginning to get PySpark properly set up (I am a heavy Python and R user), but after that it is mainly a matter of time to get used to the PySpark syntax.

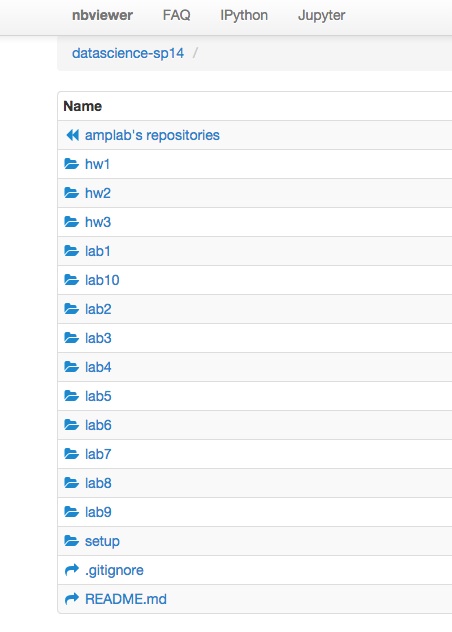

Here is a programming guide that I found really helpful. I highly recommend trying out every example and getting familiar with any term you don’t already know, such as `sc`, `rdd`, `flatMap`, etc.

The reason I really like it is that the philosophy is a lot like Hadley Wickham’s dplyr package. You can easily chain the commonly used transformation operations such as filter, map (mutate), group_by, etc. So far I have been playing around with a dataset that contains 250 million rows. I have not done much performance tuning, but the out-of-the-box performance is fairly promising considering my small cluster (500 GB of memory capacity). A map function (some transformation on each line) generally takes less than 2 minutes.
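As an illustration of that chaining style, here is a minimal PySpark sketch, not the code I actually ran. The file name `invoices.csv`, its `customer_id,amount` layout, and the helper names are all hypothetical. The Spark calls used (`textFile`, `filter`, `map`, `reduceByKey`, `take`) are standard RDD operations.

```python
def is_data_line(line):
    """Keep non-empty lines that are not the (assumed) CSV header."""
    return bool(line.strip()) and not line.startswith("customer_id")


def parse_line(line):
    """Turn 'customer_id,amount' into a (customer_id, amount) pair."""
    customer_id, amount = line.split(",")
    return customer_id.strip(), float(amount)


def total_by_customer(sc, path):
    """Chain filter -> map -> reduceByKey on an RDD of text lines."""
    return (
        sc.textFile(path)                   # rdd of raw lines
          .filter(is_data_line)             # roughly dplyr's filter
          .map(parse_line)                  # roughly dplyr's mutate
          .reduceByKey(lambda a, b: a + b)  # roughly group_by + summarise(sum)
    )


def main():
    # Imported here so the helpers above can be tried without Spark installed.
    from pyspark import SparkContext

    sc = SparkContext(appName="invoice-totals")  # hypothetical app name
    try:
        # Transformations are lazy; take() is the action that triggers work.
        for customer_id, total in total_by_customer(sc, "invoices.csv").take(10):
            print(customer_id, total)
    finally:
        sc.stop()
```

Calling `main()` from an interpreter where PySpark is set up runs the whole chain. In the interactive `pyspark` shell, `sc` already exists, so you can call `total_by_customer(sc, ...)` directly.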