Analysts have always been in high demand. Business users and stakeholders need facts and insights to guide their day-to-day decisions and future planning, and getting them usually requires some technical skill to manipulate the data. For most people this is a time-consuming and sometimes frustrating experience: everything is urgent, everything is wrong, some of it helps, and you are lucky if the exercise makes a positive impact in the end.

In the old world, you had your top guy or gal: a factotum who knew a variety of tools such as Excel, SQL and Python, had some BI experience and, above and beyond that, maybe some big data background. To be a good analyst, this person had better be business-friendly, have a beta personality with a quiet and curious mindset, work hand in hand with your stakeholders, and sift through all their random thoughts to turn them into a query that fits the data schema.

In the past few years, computing frameworks have improved to the point that much data work is democratized: anyone with a year or two of engineering background can work with an almost unlimited amount of data with ease (cloud computing, Spark, Snowflake, even just pandas, etc.). Tools like Power BI, Tableau or Excel let people query well-formatted data themselves, and innovations like ThoughtSpot, Alteryx and DataRobot aim to make it a turnkey solution.

Recently I came across a demo using LangChain. Without any training, the solution can generate interesting thoughts and turn natural language into working queries, though the results are mediocre.

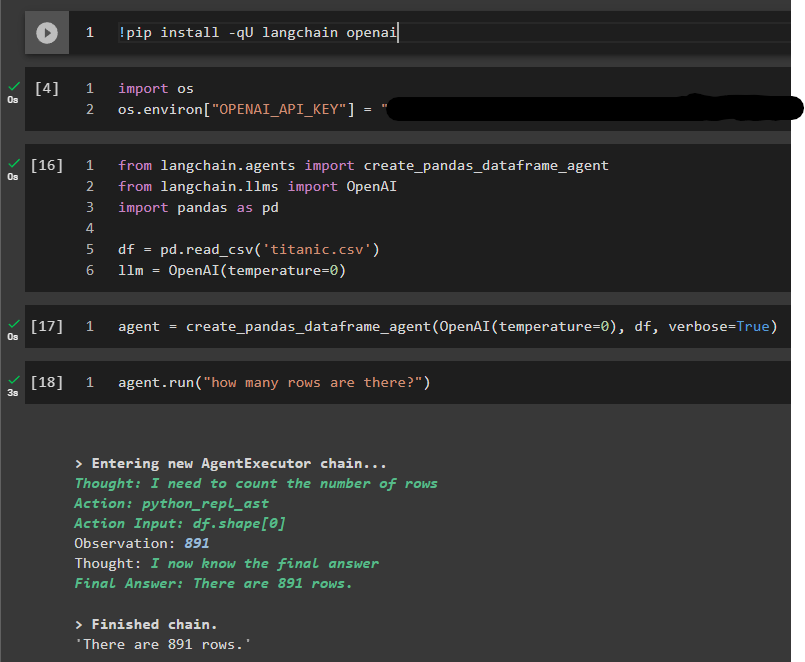

Setup

The setup is extremely easy: sign up for an OpenAI account, install LangChain, and that is it.

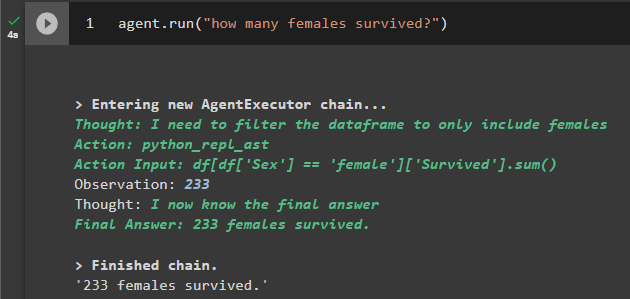

Once you create an agent, you can just call agent.run and ask any question you may have in plain language.
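A minimal sketch of that setup, assuming the early-2023 LangChain API that demos of this kind used (`create_pandas_dataframe_agent` in `langchain.agents`, the `OpenAI` wrapper in `langchain.llms`), an `OPENAI_API_KEY` environment variable, and a local Titanic CSV. The helper name `build_titanic_agent` is hypothetical, and later LangChain releases moved the agent factory to `langchain_experimental.agents`, so check the docs for the version you install.

```python
import pandas as pd


def build_titanic_agent(csv_path: str):
    """Return a LangChain pandas agent over the CSV at csv_path (hypothetical helper)."""
    # Imported inside the function so this file still loads without langchain.
    # Assumes the early-2023 module layout; newer versions use langchain_experimental.agents.
    from langchain.agents import create_pandas_dataframe_agent
    from langchain.llms import OpenAI

    df = pd.read_csv(csv_path)
    # temperature=0 reduces (but does not remove) randomness in the answers.
    # OpenAI() reads the API key from the OPENAI_API_KEY environment variable.
    return create_pandas_dataframe_agent(OpenAI(temperature=0), df, verbose=True)
```

Usage, with a hypothetical file name: `agent = build_titanic_agent("titanic.csv")`, then `agent.run("how many rows are there?")`. With `verbose=True` the agent prints its intermediate thoughts and the pandas code it executes, which makes it easier to check its work.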

To confirm everything was working properly, we asked “how many rows are there?” and got the answer “891” for the Titanic dataset.
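Checking that answer by hand is a one-liner in pandas. The sketch below uses six hypothetical toy rows (only the column names follow the Kaggle Titanic CSV); on the real file, `len(df)` is the number to compare against the agent's 891.

```python
import pandas as pd

# Six hypothetical toy rows; column names follow the Kaggle Titanic CSV.
df = pd.DataFrame({
    "Survived": [0, 1, 1, 1, 0, 0],
    "Pclass": [3, 1, 3, 1, 3, 3],
    "Sex": ["male", "female", "female", "female", "male", "male"],
    "Age": [22.0, 38.0, 26.0, 35.0, 35.0, None],
    "Fare": [7.25, 71.2833, 7.925, 53.1, 8.05, 8.4583],
})

row_count = len(df)          # number of rows
n_rows, n_cols = df.shape    # shape is (rows, columns)
print(row_count, n_cols)
```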

Interesting Questions to Ask:

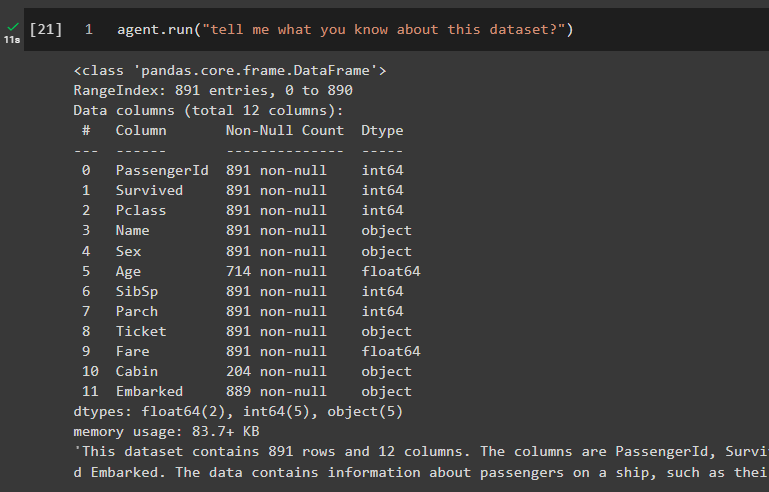

Schema

To get started, you can ask about the schema. Typically, people would run df.describe(), df.info() or df.dtypes for this.

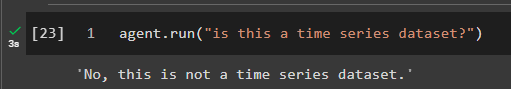

As the number of columns grows, navigating that output to find the column types you care about becomes time-consuming; now you can simply ask the dataset instead.
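For comparison, the manual route on a wide table is to filter columns by dtype rather than scan the full listing. A sketch on a few hypothetical toy rows with Kaggle Titanic column names:

```python
import pandas as pd

# Hypothetical toy rows; column names follow the Kaggle Titanic CSV.
df = pd.DataFrame({
    "PassengerId": [1, 2, 3],
    "Survived": [0, 1, 1],
    "Sex": ["male", "female", "female"],
    "Age": [22.0, 38.0, None],
    "Fare": [7.25, 71.2833, 7.925],
})

print(df.dtypes)  # one dtype per column

# Select columns by dtype instead of reading the whole listing.
numeric_cols = df.select_dtypes(include="number").columns.tolist()
other_cols = df.select_dtypes(exclude="number").columns.tolist()
print(numeric_cols, other_cols)
```

Using `exclude="number"` rather than `include="object"` keeps the text-column check working whether pandas stores strings as `object` or as its newer string dtype.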

Filter

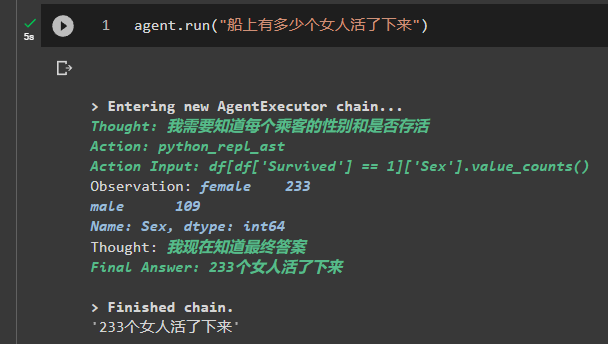
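The exact question in the screenshot isn't reproduced in the text, so as a hedged illustration: a filter question such as “which first-class passengers survived?” boils down to a boolean mask in pandas, which is what the agent's generated code can be checked against. Six hypothetical toy rows stand in for the dataset.

```python
import pandas as pd

# Six hypothetical toy rows; column names follow the Kaggle Titanic CSV.
df = pd.DataFrame({
    "Survived": [0, 1, 1, 1, 0, 0],
    "Pclass": [3, 1, 3, 1, 3, 3],
    "Sex": ["male", "female", "female", "female", "male", "male"],
    "Age": [22.0, 38.0, 26.0, 35.0, 35.0, None],
    "Fare": [7.25, 71.2833, 7.925, 53.1, 8.05, 8.4583],
})

# Boolean mask: combine conditions with & and wrap each one in parentheses.
mask = (df["Pclass"] == 1) & (df["Survived"] == 1)
first_class_survivors = df.loc[mask, ["Sex", "Age", "Fare"]]

# DataFrame.query expresses the same filter as a string expression.
via_query = df.query("Pclass == 1 and Survived == 1")
print(first_class_survivors)
```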

Thanks to OpenAI, it even supports multiple languages: if you ask the question in Chinese, it replies in Chinese.

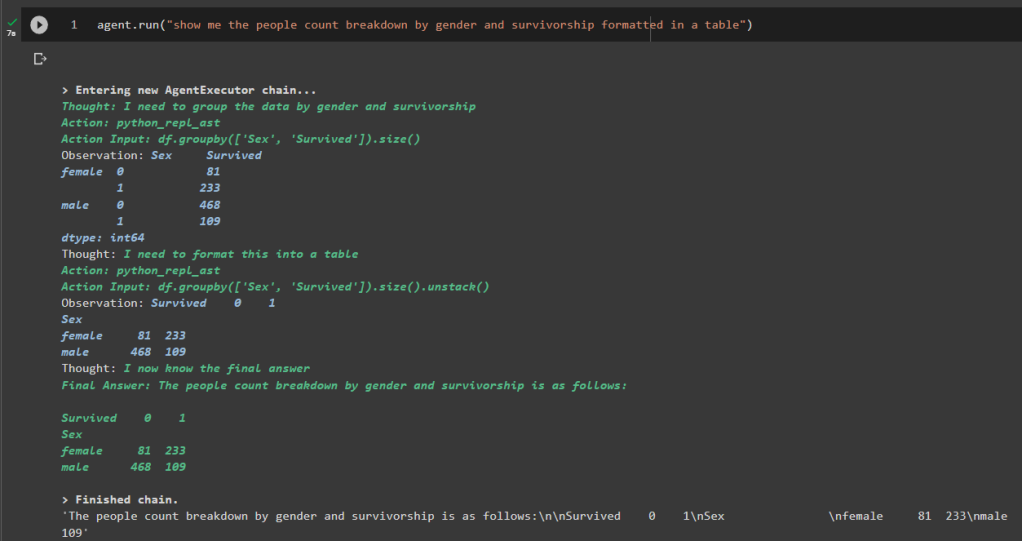

Aggregation

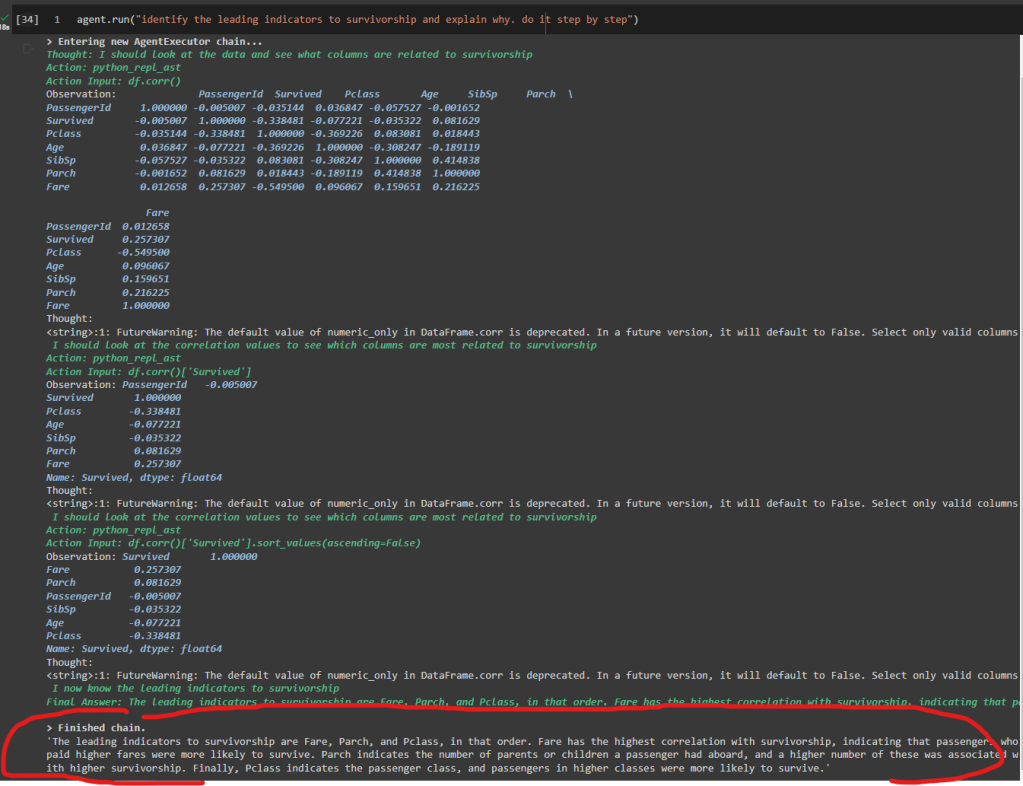
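An aggregation answer can be cross-checked with a `groupby`. The sketch below computes survival counts, survival rate and mean fare per passenger class using pandas named aggregation; the six rows are hypothetical toy data, not the real dataset.

```python
import pandas as pd

# Six hypothetical toy rows; column names follow the Kaggle Titanic CSV.
df = pd.DataFrame({
    "Survived": [0, 1, 1, 1, 0, 0],
    "Pclass": [3, 1, 3, 1, 3, 3],
    "Sex": ["male", "female", "female", "female", "male", "male"],
    "Age": [22.0, 38.0, 26.0, 35.0, 35.0, None],
    "Fare": [7.25, 71.2833, 7.925, 53.1, 8.05, 8.4583],
})

# Named aggregation: output_column=(input_column, function).
summary = df.groupby("Pclass").agg(
    passengers=("Survived", "count"),
    survived=("Survived", "sum"),
    survival_rate=("Survived", "mean"),
    mean_fare=("Fare", "mean"),
)
print(summary)
```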

Correlation

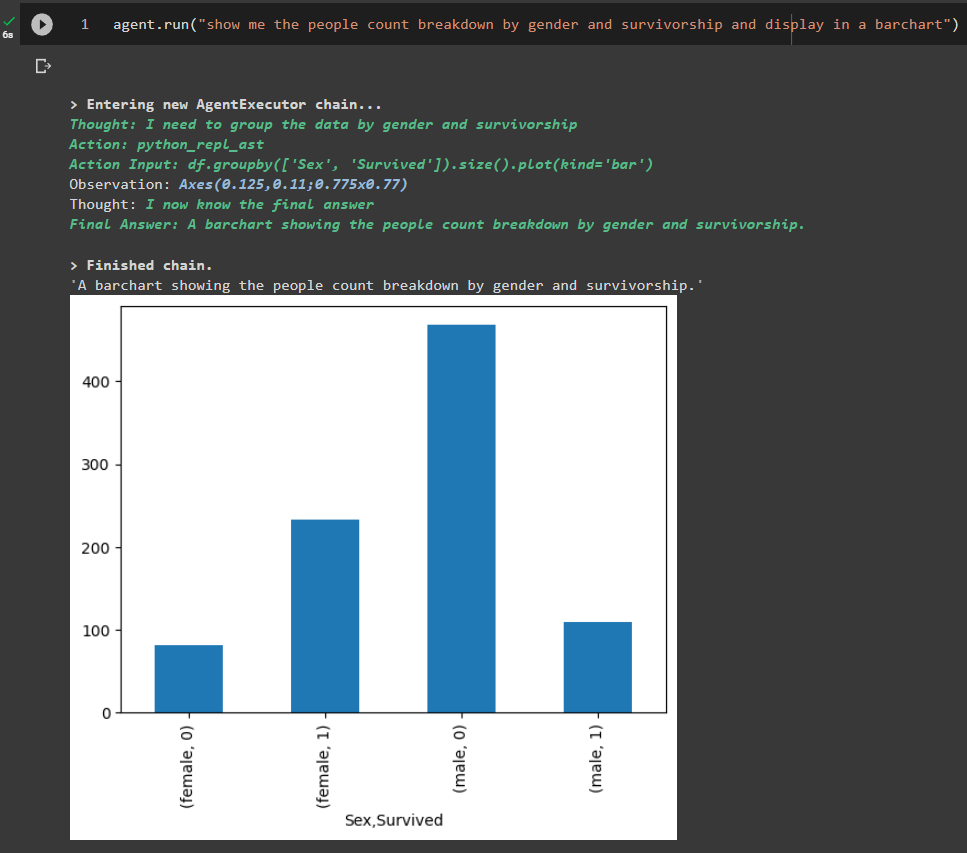
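A correlation answer can be reproduced with `DataFrame.corr`, which computes Pearson correlation on numeric columns only, so a categorical column like `Sex` has to be encoded first. On six hypothetical toy rows the numbers carry no statistical meaning; the sketch only shows the mechanics.

```python
import pandas as pd

# Six hypothetical toy rows; column names follow the Kaggle Titanic CSV.
df = pd.DataFrame({
    "Survived": [0, 1, 1, 1, 0, 0],
    "Pclass": [3, 1, 3, 1, 3, 3],
    "Sex": ["male", "female", "female", "female", "male", "male"],
    "Age": [22.0, 38.0, 26.0, 35.0, 35.0, None],
    "Fare": [7.25, 71.2833, 7.925, 53.1, 8.05, 8.4583],
})

# Encode Sex as 0/1 so it can take part in the correlation matrix.
df["IsFemale"] = (df["Sex"] == "female").astype(int)

# Pearson correlation; the missing Age value is dropped pairwise.
corr = df[["Survived", "Pclass", "Age", "Fare", "IsFemale"]].corr()
print(corr.round(2))
```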

Visualization

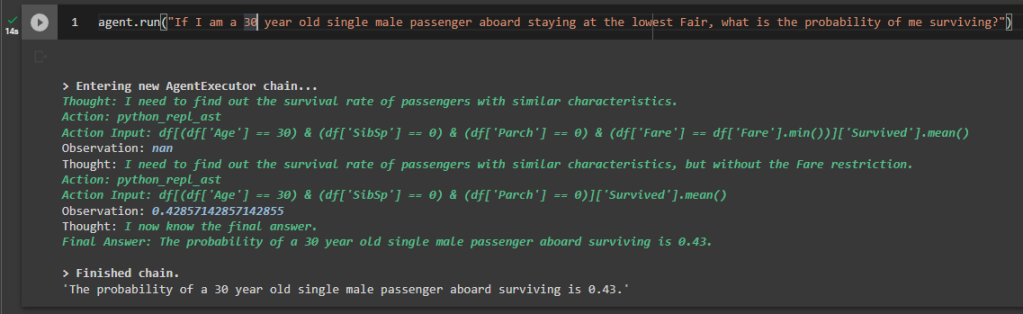

Machine Learning?

Not Perfect

One interesting observation: when I asked “how many women survived”, I got three different answers from the exact same cell. The first time, the answer was wrong because it only counted how many females were aboard, without taking survival into account. After several other questions, I asked the same question again and somehow it returned 0. When I restarted by recreating the LLM, I got the correct answer.

Again, as with any other solution: “trust but verify”.
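A cheap way to verify is to run the intended pandas yourself and compare. The sketch below uses five hypothetical toy rows, chosen so that one woman did not survive, and computes both the question the agent first answered (women aboard) and the one actually asked (women who survived).

```python
import pandas as pd

# Hypothetical toy rows; one woman did not survive, so the two counts differ.
df = pd.DataFrame({
    "Sex": ["female", "female", "female", "male", "male"],
    "Survived": [1, 0, 1, 0, 1],
})

is_female = df["Sex"] == "female"

# The first, wrong answer: counts women aboard and ignores survival.
females_aboard = int(is_female.sum())
# The intended answer: women who also survived.
women_survived = int((is_female & (df["Survived"] == 1)).sum())
print(females_aboard, women_survived)
```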